I’ve been wanting for a while to write up my thoughts on the idea of “cyclical” climate variations, and to express extreme skepticism about them, about one element in particular. Many have noted, with some degree of enthusiasm, that there is a claimed “cycle” in economic activity with (very roughly) the same periodicity claimed to exist in climate: the Kondratiev Wave.

I’ll be perfectly frank. I don’t think the Kondratiev Wave exists. I don’t think it is an actual, real thing. I think it is complete and total bull crap.

But before I can write up a long ass post explaining why it is complete and total bull crap, I want to just post up some fun things.

As before, I will use US GDP data, with the portion representing Government spending removed, to represent actual meaningful production. Except this time, I really want more data than just back to 1890, so I use a simple method to estimate the state and local spending from the federal spending. From 1890-2013, there is a correlation between a change in federal spending as a fraction of GDP and a change in federal spending as a fraction of total government spending. The correlation is better in later years, so one should be a little bit wary of extending it back in time. But let’s proceed with reckless abandon nonetheless. The main purpose is to properly restrict what are mostly war spikes to increases in federal spending. I can use this relationship to estimate how much of the total government spending before 1890 was made up of federal spending and how much of state and local spending. One can then use the estimated fraction, federal/totgov, with the actual federal spending, to get an estimate of total government spending in years before 1890. Anyway, the chart of that fraction has the estimated portion highlighted.
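For anyone who wants to play along, here is a minimal Python sketch of one way to read that procedure. It is an illustration under assumptions, not the actual calculation: the function names (`fit_line`, `backcast_share`, `total_government`) are made up for this example, and both the ordinary least squares line on year-to-year changes and the step-backward recursion are guesses at the details. The text above only says the two changes are correlated.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of ys = intercept + slope * xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def backcast_share(fed_gdp_known, share_known, fed_gdp_early):
    """Estimate federal/totgov for years before the known sample.

    fed_gdp_known, share_known: chronological, aligned lists of
    federal/GDP and federal/totgov (1890 onward in the post).
    fed_gdp_early: chronological federal/GDP for the earlier years,
    ending the year just before the known sample starts.
    """
    # Fit change-in-share against change-in-federal/GDP on the known years.
    d_fed = [b - a for a, b in zip(fed_gdp_known, fed_gdp_known[1:])]
    d_share = [b - a for a, b in zip(share_known, share_known[1:])]
    slope, intercept = fit_line(d_fed, d_share)

    # Walk backward from the first known year, undoing one fitted change
    # per step. ASSUMPTION: nothing here keeps the share inside (0, 1].
    shares = []
    next_fed, next_share = fed_gdp_known[0], share_known[0]
    for fed in reversed(fed_gdp_early):
        share = next_share - (intercept + slope * (next_fed - fed))
        shares.append(share)
        next_fed, next_share = fed, share
    shares.reverse()  # back to chronological order
    return shares


def total_government(fed_spending, shares):
    """Total government spending = federal spending / (federal/totgov)."""
    return [f / s for f, s in zip(fed_spending, shares)]
```

Since the fit is weaker in the early part of the sample, any backcast like this inherits that weakness, which is the reason for the wariness mentioned above.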

Note that with this, I can extend total government spending as a percent of GDP back to 1792. However, the GDP data go back to 1790. After converting from percent of GDP to billions of dollars, and deflating the series to constant 2013 dollars, I observe that the first couple of years saw a slight declining trend. I simply assume 1791 was about 2.2% higher than 1792, and the same for 1790 relative to 1791, to extend the data backwards. This is less than satisfactory, but I wanted to be able to have a reasonable estimate for every year. Anyway, I then subtract those values for total government spending from GDP.

For those of you who haven’t taken an undergraduate macro course: well, first of all, don’t, if you are at almost any university in the US. Second, GDP is defined as the sum of all final expenditures by Consumers (C), Government (G), and Investors (I), plus the difference between Exports and Imports (“Net Exports,” NX). So we are basically talking about GDP - G, or C + I + NX. As measures go, this has a few things to recommend it over GDP including G. But it depends what you are trying to “measure.” And it still suffers from a number of defects. Nevertheless, as a measure of economic growth and fluctuation, I find it nigh infinitely superior.
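The backward extension and the subtraction are simple enough to sketch in a few lines of Python. The function names (`extend_back`, `private_output`) are hypothetical, and the inputs are assumed to be chronological lists already in constant dollars.

```python
def extend_back(series, step=0.022, extra_years=2):
    """Prepend earlier years, each assumed `step` higher than the next one.

    With the defaults, a series starting in 1792 gains values for 1791
    and 1790, each about 2.2% above the year that follows it.
    """
    out = list(series)
    for _ in range(extra_years):
        out.insert(0, out[0] * (1.0 + step))
    return out


def private_output(gdp, total_gov):
    """GDP - G, which by the expenditure identity equals C + I + NX."""
    return [y - g for y, g in zip(gdp, total_gov)]
```

For example, a government spending series that starts at 100 in 1792 would be extended to about 102.2 for 1791 and about 104.4 for 1790.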

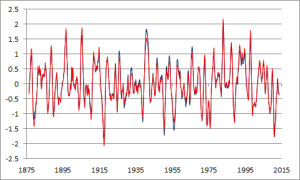

Anyway, economists frequently refer to fluctuations in GDP as representing an “output gap,” which basically refers to the percent departure of GDP from a long term trend curve. There are lots of ways to calculate a long term trend curve, and how you do so determines a great deal about what you will conclude about cyclical variations in output. It’s also questionable whether the entire thing is a very meaningful concept, or more specifically, what meaning to attach to the long term trend curve. My current thinking is somewhere between that it is meaningless data torture and that it represents just a proxy for progress. Again, let’s proceed with reckless abandon regardless.

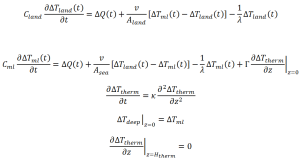

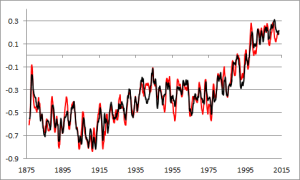

My method for removing the long term trend line has as a goal not removing anything that might conceivably represent a short term variation. So I want a highly aggressive filter. Oh say, I’ve got that. Specifically, I take the following steps: I take the growth rate year over year, for each year relative to the previous. I then lag that back one year. For all years but the first and last, I average those two series. For the first and last, I take the average of the next two, and previous two years, respectively. Then, I iteratively smooth it: I take three point centered averages, with the first and last points double weighted to extend the centered averages to the ends of the series, 1110 times; that is, 10*(years-2)/2 (since years happens to be 224, an even number). I then use this final smoothed series of “long term growth rates” to create a compound growth curve starting at 1 in 1789. I multiply this by a factor suggested by regression against GDP-G to get TREND1. I then take the ratio GDP-G/TREND1. TREND1 seemed to consistently underestimate values in the first half of the data, so I did the smoothing on that ratio 1110 times and multiplied TREND1 by the smoothed ratio, which gave the new estimate of the long term trend. Then I take the ratio of the actual GDP-G to the trend curve to estimate the “output gap.”
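The steps above can be sketched in plain Python. This is one reading of the description, not the original spreadsheet: the function names are made up, the endpoint rule is read as averaging the two nearest interior values of the averaged series, and the “factor suggested by regression” is assumed to be a least squares scale factor with no intercept.

```python
def centered_growth(levels):
    """Average each year's growth from the prior year and to the next year."""
    n = len(levels)
    g = [levels[t] / levels[t - 1] - 1.0 for t in range(1, n)]
    c = [0.0] * n
    for t in range(1, n - 1):
        c[t] = 0.5 * (g[t - 1] + g[t])  # growth into t and out of t
    c[0] = 0.5 * (c[1] + c[2])          # ASSUMPTION: next two averaged values
    c[-1] = 0.5 * (c[-2] + c[-3])       # ASSUMPTION: previous two averaged values
    return c


def smooth_once(s):
    """Three point centered average; end points are double weighted."""
    n = len(s)
    out = [0.0] * n
    out[0] = (2.0 * s[0] + s[1]) / 3.0
    out[-1] = (s[-2] + 2.0 * s[-1]) / 3.0
    for i in range(1, n - 1):
        out[i] = (s[i - 1] + s[i] + s[i + 1]) / 3.0
    return out


def smooth(s, passes):
    for _ in range(passes):
        s = smooth_once(s)
    return s


def default_passes(years):
    """10*(years-2)/2, which is 1110 for 224 years."""
    return 10 * (years - 2) // 2


def output_gap(levels, passes=None):
    """Fractional departure of `levels` (e.g. GDP-G) from the smoothed trend."""
    if passes is None:
        passes = default_passes(len(levels))
    rates = smooth(centered_growth(levels), passes)

    # Compound growth curve starting at 1 in the year before the data begin.
    curve, value = [], 1.0
    for r in rates:
        value *= 1.0 + r
        curve.append(value)

    # ASSUMPTION: scale factor from a no-intercept least squares fit.
    scale = sum(x * c for x, c in zip(levels, curve)) / sum(c * c for c in curve)
    trend1 = [scale * c for c in curve]

    # Second pass: smooth the ratio to TREND1 and fold it back into the trend.
    ratio = smooth([x / t for x, t in zip(levels, trend1)], passes)
    trend = [t * r for t, r in zip(trend1, ratio)]
    return [x / t - 1.0 for x, t in zip(levels, trend)]
```

A useful sanity check is a series that grows at a constant rate: the filter should return a gap of essentially zero in every year, since there is nothing but trend to remove.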

Some things stand out. One, we are currently about 9% under trend. That is pretty bad, although not exceptionally bad. Another thing that stands out is the Great Depression. Actually, it’s probably the first thing that jumps out at you. What you might not recognize is what is going on in the 1940s, when it spikes below trend again. That’s what I like to call the “War Depression.” War Depressions are actually a common feature in much of the data, notably associated with the War of 1812, the Civil War, World War 1, and World War 2 (after that, wars no longer stand out as times of exceptional government displacement of economic activity, which becomes the peace time norm).

In the case of the War Depression of the 1940s, it’s a Depression you’ve never heard of. That’s because people didn’t lose their jobs. In fact, employment grew, because the Government drafted people into the military and made exceptions for war time production jobs, and so on. But private investment was way down. This wasn’t growth, this wasn’t a consumer economy, this was a Soviet style Command economy. Instead of people choosing between scarce means to their own ends, the Government chose between means to its ends. In that sense, and in the sense that people lived an austere life under rationing and price controls, this was truly a depressed economy. You might call it the opposite of a jobless recovery: a jobful depression. As Hayek says in the Keynes v. Hayek rap, round two, “Creating employment is a straightforward craft, when the nation’s at war, and there’s a draft. If every worker was staffed in the army and fleet, we’d have full employment, and nothing to eat.”

And let’s be clear about that: the intervention of the Government caused those conditions. No ifs, ands, or buts. In 1942, GDP-G was at trend, or at least only slightly below, to the point of statistical indistinguishability. And it took a considerable growth rate to get there, since this is coming out of the Depression.
The ramp up in War spending, mostly after 1942, didn’t end a Depression that was already over. It created a new one that it hid in the standard statistics. But when the spending on the war ended, and the Government lifted a lot of the war time price controls, rationing, and other things that (as I said before) made it a Soviet Style Command Economy, massive cuts in Government spending were associated with an expansion of investment. That’s an important point: this wasn’t “pent up consumer demand” that merely offset decreased Government spending. This was a booming recovery of investment, more than of consumption. And why not? Frankly, the situation both during the Depression and the War was, from the perspective of the investor, terrifying as hell. This is documented fact.

And when you combine the War Depression and the Great Depression together, they make the single longest slump of non-Government output below trend in US history, at 18 years from 1930-1947. Contrast that with the over trend boom periods from 1879-1893 (15 years) and 1895-1913 (19 years).

I note the latter two periods for a couple of reasons. First, conventional wisdom is that an over trend economy must reflect an “inflationary gap,” with demand generally outpacing supply and driving up the price level. But during the first period, the deflator decreased almost 9%; the average inflation rate was about -0.6%. In the second period, despite output being well over trend for a long time, average inflation was just ~1.8%. Over the whole stretch from 1879 to 1913, the price level increased only about 23.5%. Compare that to 1979-2013, which saw an increase of 261.3%. And that was emphatically not a period where every year but one was above trend!

Second, that period, 1879-1913, popped out of the analysis. I did not go fishing for it. But it happens to correspond to the period associated with the classical gold standard. Like, pretty much exactly.
So that seems to me a pretty strong indication that, at least back then, a gold standard worked quite well, in the sense that it allowed strong, persistent economic development, even above and beyond the long term growth rate, with long term stable prices. I think there are a lot of questions about how good it would be to reinstate it now, especially unilaterally.

Notably, the performance under the Gold Exchange Standard was not very good at all, or under Bretton Woods for that matter. However, a strong case can be made that the major shift in monetary policy in 1913 (internationally, the demise of the classical gold standard, and in the United States, the creation of the Federal Reserve system) was a shift to an inferior system, and certainly the alleged goals of the creation of the Fed were not actually achieved. Notably, the claim in Econlib’s Gold Standard article that the economy under the Fed was more stable, at least after WWII (the interwar years generally being handwaved as “practice” before Central Bankers allegedly became wise and enlightened), is dated. More up-to-date, mainstream analyses (not my analysis either; people like Christina Romer, not exactly a Right winger) find that the volatility of the pre-Fed era has generally been overestimated. The same is true for what it says of unemployment. Note that those analyses use a much less powerful attenuation filter to assess volatility relative to trend than I do. Though I do find that the entire Fed period has a greater standard deviation of the “output gap” than the 100 prior years, it appears that the data I use and the method for removing the trend do show “improvement” in decreased volatility if you compare 1946-2013 with the 68 years before the Fed. On the other hand, the standard deviations for 1879-1913 and 1979-2013 are nearly identical; the latter period is only very slightly lower.
Factoring in the possibility that my method is leaving in things that really shouldn’t count as “volatility,” the possibility that the Measuring Worth data suffer from defects in their methodology that cause them to intrinsically overestimate past volatility (which may or may not be the case), and the possibility that the recent economy has been “luckier” in terms of avoiding supply shocks, there really is not much evidence here that the Fed stabilized output relative to the Gold Standard, although it does appear to have depressed output relative to the Gold Standard. Well, to be fair, that could be because of the larger government, and not the monetary policy. Similarly, as that paper I linked points out, the larger Government “theoretically” should reduce volatility, as well as reducing growth, acting essentially as a poor man’s good monetary policy. I’m not sure I buy that, since there isn’t much reduced volatility to attribute in the first place.
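As a quick check on the price level comparison above, cumulative changes can be converted to average annual rates with compound growth arithmetic. This is a minimal Python sketch; the function name `annualized_rate` is made up, and it assumes both 1879-1913 and 1979-2013 span 34 annual steps.

```python
def annualized_rate(total_change, years):
    """Constant yearly rate that compounds to `total_change` over `years`.

    total_change is a fraction: 0.235 means the level rose 23.5%.
    """
    return (1.0 + total_change) ** (1.0 / years) - 1.0


# Cumulative price level changes quoted in the post.
gold_standard_era = annualized_rate(0.235, 34)  # 1879-1913
recent_era = annualized_rate(2.613, 34)         # 1979-2013

print(f"1879-1913: {gold_standard_era:.2%} per year")
print(f"1979-2013: {recent_era:.2%} per year")
```

That works out to roughly 0.6% a year for the gold standard stretch against roughly 3.9% a year for the recent one.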

Hm, I’m rambling quite a bit. Why was I writing this again? Oh, right, I just wanted to describe the data I’d be using for my post on the (non) existence of the Kondratiev Wave. Anyway, we’ll revisit that later. For now, there are several interesting things for readers to ponder.

Also this was a great opportunity for me to ramble on about economics on what is ostensibly a science blog. 😉